Top Down (Downward) Causation

Major rewrite 02-01-20

This page started as a (very) stripped-down version of the chapter written by Keith Farnsworth, George Ellis and Luc Jaeger (2017) for "From Matter to Life: Information and Causality", published by Cambridge University Press. Keith has since rewritten it a) to fit better with the rest of the website and b) to connect it with the relational biology of Robert Rosen and his followers. So what you will find here is the first unification of the concept of downward causation (from Donald Campbell (1974), Denis Noble (2012), George Ellis (2012) and others) with the theory of Relational Biology (originating from Nicolas Rashevsky's use of category theory, developed by Robert Rosen from the 1960s to the 1990s and subsequently by Aloisius Louie), all within the paradigm of information thinking. We recommend you read the pages on Causation and Emergence before this one. This page on Downward Causation should be read in conjunction with the one on Transcendent Complexes, Information and Emergence: the two pages are effectively partners.

Preparation

If you are willing to believe in hard emergence, then you will agree that the organisation (embodied information) at some whole-system level can constrain at least the behaviour of the system as a whole. To put it another way, the configuration of a system (e.g. the way a machine is put together) determines, at least in part, its behaviour. The idea of downward causation is that this constraint can also operate on the constituent parts of the system. When examined, this seemingly simple idea opens up a whole world of surprising consequences. It is, of course, highly controversial, and it is ignored by most scientists (since it is a further step beyond hard emergence). Clearly downward causation presupposes causation, and that in itself is contested. Although cause seems intuitive, we must immediately distinguish between several different meanings of the word. We must then recognise that conventional science deals with only one of these meanings, and that this one is difficult to justify within the most fundamental physics we have available. So, before we get to downward causation, let us first very briefly review what is meant by causation.

What do we mean by cause?

At least three different things. A fuller account of causation is provided on our webpage of that title, so here we provide just a small and simple introduction.

If you push something and it moves, we can all agree that you caused it to move. Suppose a rock is split by ice on a mountainside, and part of it rolls down and crashes into snow, which then escalates into an avalanche. We say that the ice caused the rock to split, gravity caused the broken part to fall and its collision with the snow caused the avalanche. However, there is already a mix of ideas in that chain of events. And why did the ice split the rock, and why were the ice and the rock there in the first place? To answer these (and more) questions properly, we have to think beyond the familiar (what philosophers sometimes call "medium-sized dry goods") and delve into the physical fundamentals. Here, at the level of fundamental forces and particles, the physical equations are symmetrical: there is nothing to distinguish between the agent of causation and its recipient. In fact, it looks as though cause doesn't really exist at all, and things just happen. Well, they must happen for a reason, we might protest, and surely at least the fundamental forces are the reason. Yes, but this reason refers to just one kind of cause: it is the set of rules that determine exactly what happens when something does happen.

It is an example of what is called formal cause: colloquially, "these things happen because of the way things are". The fundamental forces, being what they are, formally cause fundamental particles to do these things, because of what they are. You will notice that this is not all. What happens also depends on where things are, in time and space. That, you will recall, is embodied information. Information embodied as the form of things is part of formal cause (the other part is literally the nature of the four fundamental forces and the set of particles and their quantum characters). Historically, another kind of cause was referred to as material cause. It explains consequences by referring to the substances of the things taking part, such as the rock being hard and brittle, and the water seeping into it and expanding as it froze. The classic example is that of clay, which can be moulded, and wood, which cannot (it is carved). We can see that this is in fact a kind of micro-formal cause in everyday objects. The same is true of chemistry, where the combination of substances (e.g. ethylene molecules forming polyethylene) is material cause, but deeper down, at the micro-level, is formal cause.

The idea that an action causes a result refers to another kind of cause altogether. It is termed efficient cause and this is what we had reason to doubt a moment ago. Despite that, almost all practical scientific work with causes refers only to this efficient cause. One of the prerequisites of it is a temporal sequence: axiomatically, efficient cause always precedes its observable effect. This creates a serious problem when we have to consider circular causation of the form A->B->A, (-> representing 'causes'), which is, on the face of it, impossible because it violates the order of precedence (we will take that issue up on the circular causation page).

What we are not allowed to do in science (because of its foundational doctrine of objectivity) is refer to, or even consider, the purpose for which an action occurs: that is its final, i.e. ultimate cause. In the every-day world, this is an obvious category of causation, specified as one of Aristotle's four causes. But its implication is subjective in that a 'reason', in the sense of a purpose, implies the point of view of one having a stake in the outcome and that is beyond the pale for science. (It is a shame that the exceptionally important insight Robert Rosen had into the failing of this doctrine and how it should be overcome has been largely ignored by scientists, and biologists in particular).

Concepts of cause are often expressed in a different language to focus on a different point of view. We might say that causal power is attributed to an agency which can influence a system to change outcomes, but does not necessarily itself bring about a physical change by direct interaction with it. In an easily grasped analogy, the Mafia boss says his rival must be permanently dealt with (the boss has causal power), but his henchman does the dirty deed. The action of the henchman is physical and dynamic and the henchman is logically described at the same ontological level as his victim (it is not the cartel that kills the rival, not the rotten society, not the atoms in the henchman’s body, but the henchman himself). A dynamic, physical cause linking agents of the same ontological level is what we referred to as an efficient cause, following Aristotle (Falcon 2015). So, when a snooker ball strikes another it causes it to move, and that is an efficient cause. But the laws of physics that dictate what will happen when one ball hits another are not efficient causes, even though they do have causal power (as such they are called formal causes). It seems that efficient cause is always accompanied by a transfer of some physically conserved quantity (energy, or momentum): this is the only way in which a physical change can take place in the physical universe. However, for an efficient cause to be realised, formal causes must also exercise their power. Auletta et al. (2008) introduced these distinctions as a preamble to explaining top-down causation in the biochemical processes of the cell (cellular 'operating system') and the same distinctions are essential for what follows here.
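To make the distinction concrete, here is a minimal Python sketch of the snooker example. It assumes an idealised head-on, perfectly elastic collision in one dimension, and the masses and speeds are illustrative values, not measurements. The update formulas play the role of formal cause: fixed rules that say what must happen whenever two balls meet. The collision itself, in which momentum passes from one ball to the other, is the efficient cause. The helper functions let us check that momentum and kinetic energy are conserved across it.

```python
from dataclasses import dataclass


@dataclass
class Ball:
    mass: float      # kg
    velocity: float  # m/s, one dimension only


def elastic_collision(a: Ball, b: Ball) -> tuple[Ball, Ball]:
    """Return both balls after an idealised head-on, perfectly elastic collision.

    These fixed rules stand for the formal cause; the collision they describe,
    which transfers momentum between the balls, stands for the efficient cause.
    """
    m1, m2 = a.mass, b.mass
    u1, u2 = a.velocity, b.velocity
    v1 = ((m1 - m2) * u1 + 2 * m2 * u2) / (m1 + m2)
    v2 = ((m2 - m1) * u2 + 2 * m1 * u1) / (m1 + m2)
    return Ball(m1, v1), Ball(m2, v2)


def momentum(*balls: Ball) -> float:
    return sum(ball.mass * ball.velocity for ball in balls)


def kinetic_energy(*balls: Ball) -> float:
    return sum(0.5 * ball.mass * ball.velocity ** 2 for ball in balls)


cue = Ball(mass=0.17, velocity=2.0)     # illustrative values
target = Ball(mass=0.17, velocity=0.0)
cue_after, target_after = elastic_collision(cue, target)
print(cue_after.velocity, target_after.velocity)  # equal masses: velocities swap, prints 0.0 2.0
```

With equal masses the moving ball stops dead and the struck ball carries on at the original speed.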

By definition, top-down causes are generated at a different (higher) ontological level from that at which they are realised via an efficient cause. This leaves open the possibility (at least) that, if downward causation exists, it is a (special) case of formal cause. If formal cause is constraint by information, then it can exert causal power without having to be the efficient cause of anything. That would be very helpful, since life itself, as we shall see, challenges any account built on linear chains of efficient causation.

Bear in mind that from an information point of view, using our definition of information as embodied in the configuration of matter/energy in space/time, the real source of cause is the formal (information) constraint on the actions of physical forces to produce efficient causes. Given this perspective it is not so difficult to conceive of a set of constraints associated with a configuration instantiated at a system level, that causes actions or behaviours, including the formation of new configurations at the level of its constituent parts. That is the essence of downward causation.

By now, you may be wondering why we are bothering to define and identify downward causation. The reason, next to be revealed, is that understanding living systems demands something at least like it because they pose a major stumbling block for any concept of linear chains of causation.

Actions without causes?

Philosophers (for example, those struggling with the question of 'free will') call each link in a chain of causal explanation one of transient causation. It is transient because cause is merely transferred through it in finite time: less as force is transferred through the links of a chain, more as a boat is passed through a ladder of locks on a canal. Following these transient links back, one always returns to the basic laws of physics and (currently) to the ‘big bang’, where our understanding runs out. Most philosophers are interested in causes that include the animate, especially the explanation of human actions. For this, many of them maintain (though they do not necessarily endorse) a notion of agent causation: the point we arrive at when a living organism appears to create a cause spontaneously, breaking the chain that leads back to fundamental physics. This notion describes the appearance of an action by an agent, the cause of which is the agent itself: it seems to act without a prior cause. Indeed, this apparent agent causation is one of the mysteries common to life: life seems to have the ability to generate events spontaneously, as though it possessed something like free will. Here we are interested in what lies behind such weirdness (and we elaborate on this on our autonomy page).

At this point we have two (hypothetical) sources of causation. The first is the beginning of a chain of physical (efficient) causes (constrained by their accompanying formal causes) that leads back to fundamental physics. The second is this mysterious agent causation. There is, finally, a kind of cause that is, perhaps, a diagnosis by elimination. In many cases things happen at random, and underlying truly (as opposed to merely apparently) random events lies the quantum property of spontaneous random variation. At the macro-scale of familiar things, randomness also manifests through thermal noise, though more deeply this is just the result of our being unable to predict the outcome of huge numbers of particles interacting (e.g. the molecules of a hot gas). In either case, we can refer to randomly spontaneous cause. It is noteworthy that most of the information presently in the universe that is available to create formal cause derived from this source material, rather than being present at the beginning (big bang) stage*. A long history of bootstrap pattern formation (information creating information) has ensued since. In the case of transient cause, the information at the end of the chain is to be found in the physical rules of the universe. The ‘selection’ of these particular laws and fundamental constant values, from among all possible ones, is what makes the universe what it is. The cosmologist and mathematician George Ellis (currently one of the leading thinkers on top-down causation) points out that the necessary simplicity of the early universe, relative to its present state, means that this cannot account for all causes. (Indeed, such an explanation would constitute a kind of preformationism: the term used to describe the faulty belief, once held, that living things were already present, fully formed but in miniature, in the zygote.)

*As we argue elsewhere, randomness is the phenomenon of spontaneously introducing new ‘information’ into the system. Thus the random ‘action without a cause’, is itself derived from information, in this case non-structured, i.e. ‘random information’. This kind of information finds a use as the raw material for selection and therefore pattern formation, from where form, complexity and, in living systems, function may be developed.
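Here is a toy Python illustration of randomness serving as raw material for selection, under three assumptions. The 'environment' is a hypothetical bit pattern standing in for whatever context does the selecting. Variation is a single random bit-flip. Selection simply keeps any variant that matches the environment at least as well as before. Starting from a purely random pattern, the retained pattern ends up carrying structured (non-random) information about its environment.

```python
import random


def select_from_noise(environment: list[int], steps: int = 5000, seed: int = 1):
    """Random variation plus selection, starting from a random bit pattern.

    'environment' is a hypothetical stand-in for the selecting context.
    Returns the initial random pattern and the pattern after selection.
    """
    rng = random.Random(seed)
    n = len(environment)
    pattern = [rng.randint(0, 1) for _ in range(n)]  # unstructured 'random information'
    initial = list(pattern)

    def match(p: list[int]) -> int:
        # selection criterion: number of positions agreeing with the environment
        return sum(a == b for a, b in zip(p, environment))

    for _ in range(steps):
        candidate = list(pattern)
        candidate[rng.randrange(n)] ^= 1        # random variation: flip one bit
        if match(candidate) >= match(pattern):  # selection keeps variants that are no worse
            pattern = candidate
    return initial, pattern


environment = [1, 0, 1, 1, 0, 0, 1, 0] * 4  # hypothetical 32-bit context
before, after = select_from_noise(environment)
print(sum(a == b for a, b in zip(before, environment)), "->",
      sum(a == b for a, b in zip(after, environment)), "of", len(environment))
```

The selection rule itself is not random. That is the point of the footnote: random variation supplies the candidates, and a non-random context decides which of them persist.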

So, in trying to account for what appear to be spontaneous actions, philosophers often come up against ‘actions without causes’, where the chain of transient causes seems to halt. If (for the sake of argument) there really is no cause whatsoever, then two things must be true. First, there must be more than one possibility (because if there were only one, the action would be wholly determined and we would step directly on to the next link in a causal chain). Second, there must be no way (mechanism or set of circumstances) of choosing among the options (because if there were, that ‘way’ would be sufficient to account for the cause). In this situation, we seem to be left only with a random choice of the path to follow. However, philosophers who believe (albeit uncomfortably) in agent causation insist that it cannot be reduced to random action (for then it is clear that the source of causation is not the agent itself, but rather an independent random process). Nothing can act 'at random' without devolving its choice to an independent (hence external) random process (as we do when we throw dice).

Having established the physical foundation of the universe as one source of cause, and randomness as another, we now need a third source of information to explain this most puzzling source of causation (i.e. agent causation). We might speculate that this one is peculiar to living systems, not found elsewhere (excluding human creations, since they are logically dependent on living systems). There is no such thing as an action without a cause - if it looks like that, then it is only because we have not yet identified the cause. When the cause appears to be an autonomous agent, then somehow information embodied by that agent is constraining physical forces that emerge from the agent to enact apparently autonomous behaviour. The simplest (and most fundamental) case of this sort of thing is the cybernetic control system.

Cybernetic control systems (with a biological example)

The easiest way to appreciate agent causation is in fact through a human creation, namely a thermostatically controlled heating or cooling system, which is a practical example of a cybernetic system. Its disarmingly simple, but highly significant, feature is that the thermostat must have a set-point, or reference level, to which it regulates the temperature. That set-point is a piece of information, and what is different about it is that it is not obviously derived from anything else: it is apparently novel information that is not random. Of course, in a heating system this information was introduced by human intervention. In the case of an organism’s homeostatic system regulating, e.g., body temperature or salt concentration, it was presumably selected for by evolution. Both of these explanations conform to the notion of a piece of new information being introduced into, or by, living systems. The set-point is not seen in abiotic systems, though natural dynamic equilibria are abundant: for example, the balance between opposing chemical reactions, and the balance between thermal expansion and gravity that maintains a star. For these systems, the equilibrium point is a dynamic ‘attractor’ and the information it represents derives from the laws of physics: these are not cybernetic systems. Only in life do we see a genuine set-point, which is independent, pure, novel and functional information.

In a thought experiment to illustrate the problem, Paul Davies puts it this way: consider the difference between flying a kite and flying a radio-controlled toy plane. The former involves physical (efficient) causation. It is obvious how a tug on the string directly causes physical changes in the behaviour of the kite, in the sense that all we have are physical forces, and these (to a good approximation) obey Newton’s laws of motion, or more generally Hamiltonian mechanics. But the toy plane is controlled via a communications channel. The only way you can influence it is via pure information (in this case communicated by modulation of a radio signal), and there is no place for this in the Hamiltonian description of the system. How, then, can this pure information determine the behaviour of the physical object that is the plane? To understand it better, let’s simplify even further and think of a remote control that just switches a lamp on and off. The remote control may send a pulse of infra-red light to a tuned photo-detector which, on receiving it, generates a small electrical current: enough to move an electromagnetic relay switch and turn the lamp on.

It now seems that we have reduced the problem to a continuous chain of physical phenomena. More careful thought reveals that this is only the case because the whole system was specifically designed to use the information from the remote control in one very particular way. Indeed, it does not matter whether the (one bit of) information was sent by light or radio; it could have been sent by wire (and switched signals on wires, turning other switches on and off, are indeed the basic hardware ingredient of a digital computer). The essential, though at first hidden, ingredient of this control by pure information is the design of the system in which it operates. The design gives the control information a context, without which it would have no ‘meaning’, in the sense that it would not be functional. Elsewhere we argue that functional information is that which causes a difference: we can now interpret that as meaning it has causal power. The pure information and the regulated system of which it is a part are inseparable. This idea can be generalised to any control system: the information causes physical effects only because it is embodied within the physical system, which gives it the context necessary for it to become functional. We may even go so far as to say that design is the embodiment of functional information in the physical form of a system. Crucially, design is a non-random, specific configuration of components. This is, of course, information. It is also a set of constraints on what any forces applied through and among the components can do. The information embodied in the system's structure is the constraint that produces formal cause.
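The point about context can be sketched in a few lines of Python. Here a 'design' is a hypothetical function describing how a receiver is wired to act on an incoming bit. The same single bit switches the lamp on under one wiring, leaves it off under another, and does nothing at all when nothing is wired to receive it. On this reading, the bit's causal power lies in the design, not in the bit alone.

```python
from typing import Callable


class Lamp:
    def __init__(self) -> None:
        self.on = False  # every trial starts with the lamp off


# A 'design' (hypothetical) fixes what a received bit does to the lamp.
Design = Callable[[Lamp, int], None]


def direct_wiring(lamp: Lamp, bit: int) -> None:
    lamp.on = bit == 1   # a 1 closes the relay


def inverted_wiring(lamp: Lamp, bit: int) -> None:
    lamp.on = bit == 0   # same parts, relay contacts swapped


def unconnected(lamp: Lamp, bit: int) -> None:
    pass                 # the pulse arrives, but nothing is wired to use it


def deliver(bit: int, design: Design) -> bool:
    """Send one bit into a receiver built to the given design; report the lamp state."""
    lamp = Lamp()
    design(lamp, bit)
    return lamp.on


for design in (direct_wiring, inverted_wiring, unconnected):
    print(f"{design.__name__}: lamp on = {deliver(1, design)}")
```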

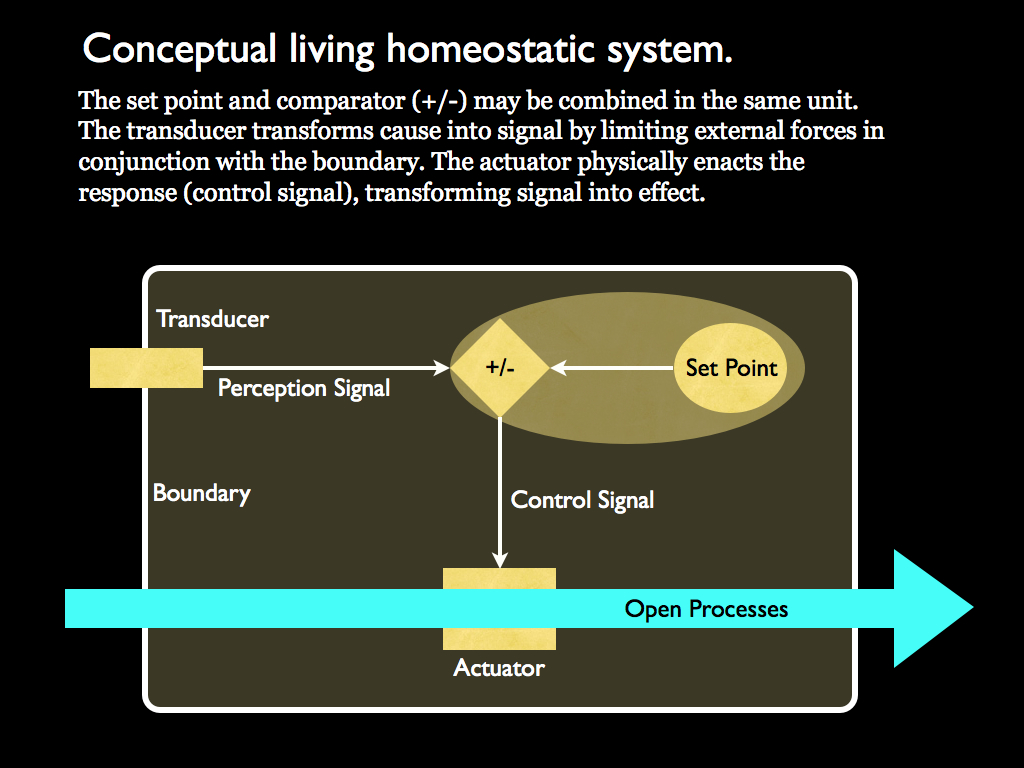

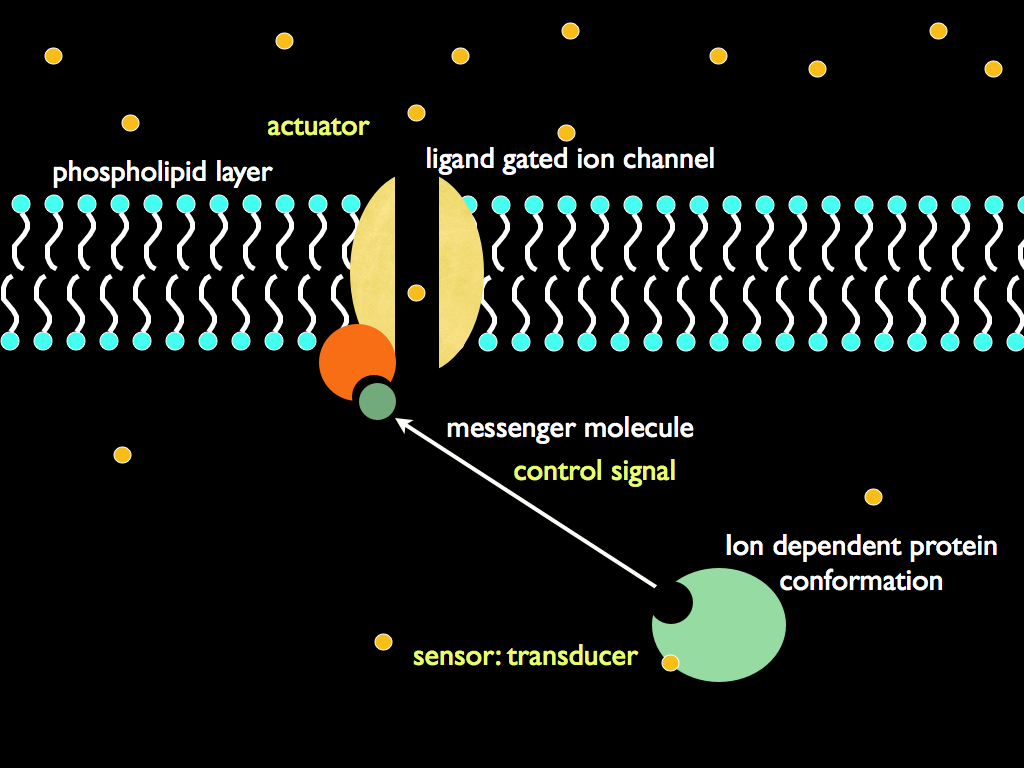

For information control to work in a cybernetic system, there must be a part of the system that performs a comparison between the current value and the set-point. This action is one of information processing (e.g. subtraction), which can be performed by a molecular switch just as well as by an electronic switch in a computer. The osmoregulation of a bacterial cell is one of the most basic tasks of homeostasis in biology, so it makes a good example (reviewed by Wood, 2011). In real cells it is far from simple: not a single molecule but several cascades of molecular switches are in operation, working differently to up-regulate and down-regulate the osmotic potential of the cell. To quote from Janet Wood’s review:

“cytoplasmic homeostasis may require adjustments to multiple, interwoven cytoplasmic properties. Osmosensory transporters with diverse structures and bioenergetic mechanisms activate in response to osmotic stress as other proteins inactivate.”

To take just one example, channels formed from proteins embedded in the cell membrane literally open and close in direct response to the internal osmotic potential, and these are crucial for relieving excess solvent (water). Crucially, the set-point is to be found in the shape of these molecules: they are physically structured (we won't say designed) so as to embody it. We do not know where this information came from (the usual, but in this case unconfirmed, answer is natural selection), but here, the important point is that we now see how it influences the physical world. It is not a mystery at all: the set point is embodied in a physical object (the shape of a protein) and this protein shape directly affects the flow of small molecules through the cell membrane. Pure information embodied in molecular structure has causal power within an osmoregulation system. Embodied information acts as formal cause.

Now, consider this: the cell is composed of its outer enclosing membrane, complete with trans-membrane proteins such as the one just described, together with the cytoplasm containing a host of other proteins, information-carrying polymers and other organic molecules. It is not a random bag of chemicals; whilst alive, it is always a highly organised and coordinated functioning system. One of its primary constituents is water, which acts as the solvent. The amount of water is regulated by the homeostatic system we just mentioned. The whole of this system (transducers, comparator, set-point, control signal carriers and actuators) exists at a level of organisation above that of the water; indeed, the water is a constituent of this system. What we are seeing, therefore, is a system (at level N) being the formal cause for an efficient cause acting on a subsystem of it (level N-1). This is the reason for being interested in downward causation.
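Here is a minimal Python sketch of this two-level arrangement, in arbitrary units and with hypothetical parameter values. Water moves passively towards the external level (level N-1 physics). A channel, whose opening threshold stands in for the set-point embodied in the protein's shape, belongs to the cell as a whole (level N). The sketch simplifies drastically by letting an open channel release all the excess at once. Without the channel, the cell's water content settles wherever physics and the environment put it, an attractor in the sense described earlier. With the channel, it settles at the channel's set-point whatever the environment.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Channel:
    # Hypothetical stand-in for a set-point embodied in protein shape:
    # the water content above which the channel opens.
    opening_threshold: float

    def is_open(self, water: float) -> bool:
        return water > self.opening_threshold


@dataclass
class Cell:
    water: float                       # arbitrary units
    channel: Optional[Channel] = None  # None models a cell without the channel

    def step(self, external_water: float, influx_rate: float = 0.2) -> None:
        # Level N-1: water moves passively towards the external level.
        self.water += influx_rate * (external_water - self.water)
        # Level N: an open channel releases the excess (crudely, all at once).
        if self.channel is not None and self.channel.is_open(self.water):
            self.water = self.channel.opening_threshold


def settle(cell: Cell, external_water: float, steps: int = 50) -> float:
    for _ in range(steps):
        cell.step(external_water)
    return cell.water


passive = settle(Cell(water=1.0), external_water=10.0)
regulated = settle(Cell(water=1.0, channel=Channel(3.0)), external_water=10.0)
print(round(passive, 3), regulated)
```

Doubling the external level moves the passive cell's final value but leaves the regulated cell at the channel's threshold.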

Generalising downward causation

So far, so good, but there is far more to downward causation than cybernetic systems and their set-points. George Ellis counts such systems as one of five different mechanisms of 'top-down' causation. More generally, he (and we) recognise causation from one level of aggregation to another in the modular organisation of nature: top-down, bottom-up and same-level. In Ellis (2011) he identifies modular hierarchical structuring as the basis of all complexity, leading to emergent levels of structure and function based on lower-level networks. Quoting Ellis from that paper:

“Both bottom-up and top-down causation occur in the hierarchy of structure and causation. Bottom-up causation is the basic way physicists think: lower level action underlies higher level behaviour, for example physics underlies chemistry, biochemistry underlies cell biology and so on. As the lower level dynamics proceeds, for example diffusion of molecules through a gas, the corresponding coarse-grained higher level variables will change as a consequence of the lower level change, for example a non-uniform temperature will change to a uniform temperature. However, while lower levels generally fulfill necessary conditions for what occurs on higher levels, they only sometimes (very rarely in complex systems) provide sufficient conditions. It is the combination of bottom-up and top-down causation that enables same-level behaviour to emerge at higher levels, because the entities at the higher level set the context for the lower level actions in such a way that consistent same-level behaviour emerges at the higher level”.

In order of sophistication, the five mechanisms of top-down causation identified by Ellis are:

1) deterministic, where boundary conditions or initial data in a structured system uniquely determine outcomes;

2) non-adaptive information control, where 'goals' determine the outcomes;

3) adaptive selection, where selection criteria 'choose' outcomes from random inputs, in a given higher level context;

4) adaptive information control, where 'goals' are set adaptively; and

5) adaptive selection of selection criteria, probably only occurring when intelligence is involved.

We can translate these mechanisms into more biological terms.

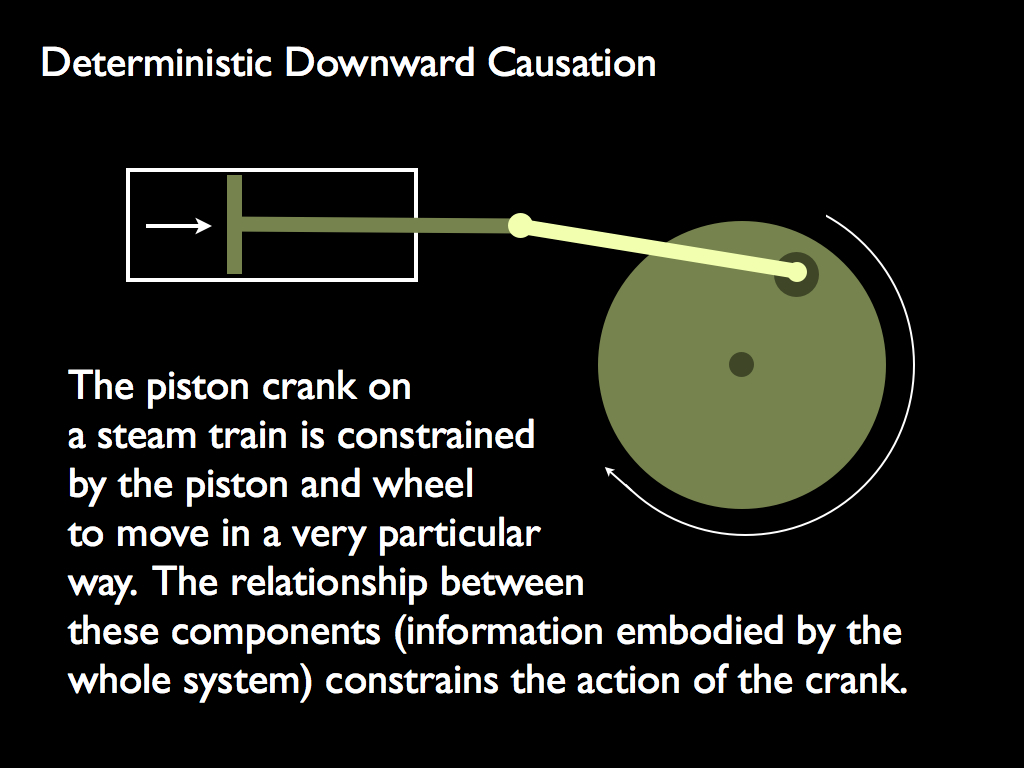

Only the first is independent of life: it describes the determination of behaviour in a subsystem by the organisation of the system of which it is a part. The classic example is the movement of a mechanical part such as the piston crank in the illustration (Ellis himself chose the digital computer as an example). Note how the spatial configuration (embodied information) constrains the component's movement and hence is the formal cause. Note too that this spatial configuration belongs to the system (level N), not the component (level N-1), because it matters where the piston and the drive wheel are located.
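The piston-crank case can be made quantitative with the standard slider-crank relation x(θ) = r cos θ + √(l² − r² sin² θ). Here θ is the crank angle, r the crank radius, l the connecting-rod length and x the distance from the crank axis to the piston pin. The Python sketch below uses illustrative dimensions. It shows that the piston's position is fixed entirely by the crank angle together with r and l. Those two quantities describe how the parts are arranged in the whole mechanism; they are not properties of the piston itself.

```python
import math


def piston_position(theta: float, crank_radius: float, rod_length: float) -> float:
    """Distance from crank axis to piston pin for crank angle theta (radians).

    crank_radius and rod_length describe the configuration of the whole
    mechanism (level N); the piston (level N-1) has no say in them.
    """
    if rod_length <= crank_radius:
        raise ValueError("the connecting rod must be longer than the crank radius")
    return crank_radius * math.cos(theta) + math.sqrt(
        rod_length ** 2 - (crank_radius * math.sin(theta)) ** 2
    )


# Same piston, same crank angle, two different (illustrative) configurations.
for r, l in [(0.05, 0.15), (0.08, 0.20)]:
    x = piston_position(math.pi / 2, r, l)
    stroke = piston_position(0.0, r, l) - piston_position(math.pi, r, l)
    print(f"r={r}, l={l}: x at 90 degrees = {x:.4f} m, stroke = {stroke:.2f} m")
```

The stroke always comes out as 2r: a property of the arrangement, imposed on the piston from above.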

However, this being a human artifact (technology), it is not strictly independent of life. We might instead think of the direction taken by the North Atlantic Drift on its way from the Gulf of Mexico towards north-western Europe. Its direction is determined not only by the thermodynamic force generated by temperature gradients, but also by the shape (embodied information) of the ocean floor and the flow of glacier meltwater from Greenland.

The second mechanism immediately brings life into focus because of the word 'goals' (to which I gave the quote marks - they are not in the original text of Ellis, 2011). Elsewhere, we explain that goals are unique to life and specifically a consequence of set-point information embodied in living structure (see pages on autonomy and autopoiesis for an explanation). When George Ellis refers to 'non-adaptive' in these mechanisms, he simply means 'fixed' (adaptive comes next in the context of selection). We can think of this mechanism of downward causation as a homeostatic system which by a fixed set point regulates the constituents of the system itself, taking osmoregulation (water concentration control) of a cell such as an E. coli bacterium as the typifying example. Here, a fixed mechanism for adjusting to a changing (water) environment, operates at the system level to cause the concentration of water molecules to conform to a system-level specification. The goal (set point) is fixed and is information embodied and intrinsic to the system (independent of anything that is not part of the system). Living cells are full of such systems, but we cannot point to a single example of one in the abiotic universe.

In the third, Ellis invokes the 'higher level context', and this is the foundation of the idea of function, since nothing can be functional except in a higher level context. The selection is often of constituents, or of pathways of control. A control system with a switch between two different set-points makes a very simple example. This is the basis of simple action-selection systems such as those found in protozoans that can perform searching or defending behaviours, depending on the circumstances (their state). Selection of a behaviour, or response, at least implies a repertoire of available responses. If the selection is to be neither random nor pre-determined (fixed), it must be based on a comparison of outcomes with a goal in mind (goal-orientated selection). For this, there must be a measure of 'goodness of outcome': the objective function, on which the goal is a particular point, numerically the set-point value (though it might be implemented as a minimisation of the difference between a perceived value and the set-point).
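The simplest version of this can be sketched in Python as follows, with every name and number hypothetical. The organism's state switches between two set-points (a desired distance from some stimulus). Each behaviour in a small, fixed repertoire has a predicted effect on that distance. Selection picks the behaviour that minimises the difference between the predicted value and the current set-point, i.e. the objective function described above.

```python
# Hypothetical set-points: desired distance from a stimulus in each state.
SET_POINTS = {"searching": 0.0, "defending": 10.0}

# Hypothetical repertoire: predicted change in distance for each behaviour.
REPERTOIRE = {"approach": -1.0, "hold": 0.0, "withdraw": 1.0}


def objective(predicted_distance: float, set_point: float) -> float:
    """Badness of an outcome: how far the predicted value lies from the goal."""
    return abs(predicted_distance - set_point)


def select_behaviour(distance: float, state: str) -> str:
    """Goal-orientated selection: the state switches the set-point, and the
    behaviour whose predicted outcome lies closest to it is chosen."""
    set_point = SET_POINTS[state]
    return min(REPERTOIRE,
               key=lambda b: objective(distance + REPERTOIRE[b], set_point))


for state in ("searching", "defending"):
    print(state, "->", select_behaviour(5.0, state))
```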

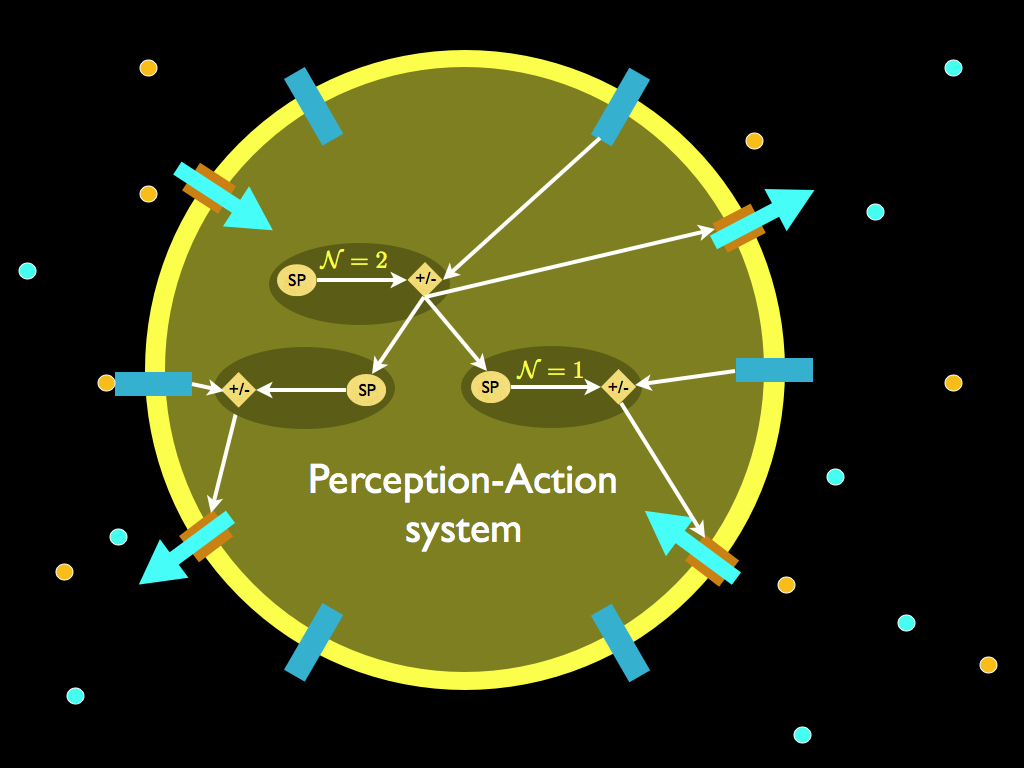

The fourth describes a system in which, at the very least, the set-point of homeostatic control is determined by a higher level of decision (which may in fact be an outer homeostatic control system). This sort of thing is illustrated on the autopoiesis page and in a diagram that explains perception-action systems. Such systems are characteristic of organisms that are more complex than prokaryotes (e.g. bacteria) but still single-celled, especially protozoa such as Stentor, which I use as an example of one of the important steps in the development of biological autonomy.
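Here is a Python sketch of the fourth mechanism, assuming two simple proportional controllers. The gains, the goal values and the coupling `output = 2 * level` are all hypothetical. The inner loop drives an internal level towards its set-point. The outer loop does not act on the level directly; instead, it adjusts the inner set-point until its own goal is met. Changing the outer goal moves the inner set-point, which is the sense in which that set-point is determined by a higher level of decision.

```python
def run_nested_control(outer_goal: float, steps: int = 200,
                       inner_gain: float = 0.5, outer_gain: float = 0.1):
    """Two nested proportional loops; returns (level, inner set-point)."""
    level = 0.0      # regulated internal variable (inner loop)
    set_point = 0.0  # inner set-point, itself adjusted by the outer loop
    for _ in range(steps):
        output = 2.0 * level                             # hypothetical coupling to the outer variable
        set_point += outer_gain * (outer_goal - output)  # outer loop revises the inner goal
        level += inner_gain * (set_point - level)        # inner loop tracks its set-point
    return level, set_point


for goal in (8.0, 12.0):
    level, set_point = run_nested_control(goal)
    print(f"outer goal {goal}: inner set-point settles at {set_point:.3f}")
```

With these gains the combined loop is stable, so both the level and the inner set-point settle at half the outer goal.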

The fifth mechanism is one in which at least two (hierarchical) levels of adaptive control generate the behaviour of the system. Ellis speculated that this probably requires 'intelligence'. Well, that all depends on how intelligence is defined. A good way (not the only one) to define it is 'the capacity to produce a novel and appropriate response to a novel stimulus (or situation)'. To respond in a way that is not stereotypical, without being merely random, requires the ability to synthesize a behaviour from available component behaviours (making something new out of the elementary parts provided by inheritance). The right components have to be selected for their function and for their relation to one another to make a particular composite behaviour. This requires at least one goal-orientated selection process (see Ellis's third mechanism). To make the new behaviour appropriate to its purpose, there has to be an expectation of the outcome (which is expected to be good) arising from the new behaviour in the new situation. For that to happen, the thing performing Ellis's fifth mechanism must be an anticipatory system. Such a system must, by definition, hold a model of itself and its circumstances, from which to predict (anticipate) the effect of any action it takes. In other words, it must have a representation of the trajectory of its state-changes in time that extends beyond the present. For this to work, it must be able to model itself in both the past and the future. It must therefore also possess a memory, and this memory will hold information about previous outcomes from a range of previous responses to different circumstances. Put simply, it can learn.
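For concreteness, here is the kind of 'algorithm with some storage capacity' one might first reach for, written in Python. It is a minimal, hypothetical agent that remembers the outcomes of its past responses in each situation. From that memory it anticipates the outcome of each candidate response, then chooses the response it expects to turn out best. It captures only the memory-and-expectation part of the description and holds no model of itself. On the Rosen argument that follows, it could at best approximate a genuine anticipatory system.

```python
from collections import defaultdict
from statistics import mean


class RememberingAgent:
    """Toy agent (hypothetical): anticipates outcomes from remembered past outcomes."""

    def __init__(self, repertoire: list[str]) -> None:
        self.repertoire = list(repertoire)
        # (situation, response) -> outcomes observed so far
        self.memory: dict[tuple[str, str], list[float]] = defaultdict(list)

    def anticipate(self, situation: str, response: str) -> float:
        past = self.memory[(situation, response)]
        return mean(past) if past else 0.0  # untried responses: neutral expectation

    def choose(self, situation: str) -> str:
        # best anticipated outcome wins; ties go to the response listed first
        return max(self.repertoire, key=lambda r: self.anticipate(situation, r))

    def remember(self, situation: str, response: str, outcome: float) -> None:
        self.memory[(situation, response)].append(outcome)


# Hypothetical world: in the cold, seeking warmth pays off and staying put does not.
def world(situation: str, response: str) -> float:
    return 1.0 if (situation, response) == ("cold", "seek_warmth") else -1.0


agent = RememberingAgent(["stay", "seek_warmth"])
for trial in range(5):
    response = agent.choose("cold")
    agent.remember("cold", response, world("cold", response))
    print(trial, response)
```

After one bad outcome from staying put, the agent switches to seeking warmth and keeps doing so.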
We might think that this can all be achieved using an algorithm with some storage capacity. However, a key finding (principally due to Rosen again) regarding anticipatory systems is that they are self-referencing in a way that is impredicative: in other words, no algorithm, no matter how complex or fancy, can fully contain them (and I will write a new page on all this in due course). Suffice it to say for now that, given a reasonable definition of (minimal) intelligence, it seems that for Ellis's fifth mechanism of downward causation, intelligence is indeed required (for an example, you can refer to the hypothetical 'free will machine' on the autonomy webpage).

What is the 'top' in top-down causation?

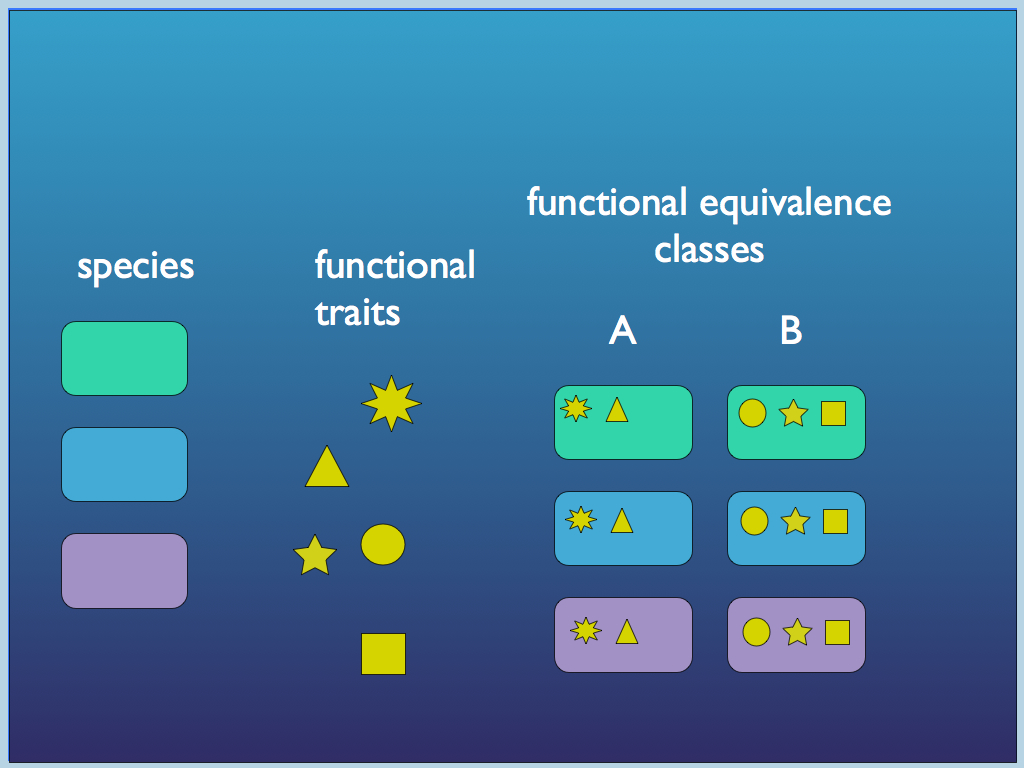

We have argued that downward causation describes formal cause produced by information that is embodied at a higher (system) level of organisation, this having causal power over sub-system levels. The higher level is composed of particular constituent components, along with the information that specifies their configuration relative to one another. The specific identity of each of these components is not important; they are substitutable, since it is only their functions that matter. That is because all systems are multiply realisable: their components come from Functional Equivalence Classes. This means that the operation of the system depends only on the particular features of the components that make them 'work' in the context of the system. To 'work' in this sense means to perform the required functions (only), and that can usually be achieved in a variety of ways. For example, a digital computer can be made from silicon-junction-based components and electrical conductors, or from mechanical valves, pipes and water. We are probably all aware that different species of life all need to derive energy from their environment, but have a wide range of chemical processing 'machinery' to do so (there is more on this in the Transcendent Complex page). So it is not the component parts per se that embody the information which gives rise to the system-level formal cause that we identify with downward causation. What is left, having discounted them, is the organisation of the system level itself. This is not just the configuration of components relative to one another, but also the specification of the functional equivalence classes to which each belongs (equivalent, by analogy, to their functional design specifications). The collection of functional specifications of components (information that is embodied in those components), together with their configuration at the system level, is a set of information that exists across both the system and component levels.
This information transcends the components in two ways. First it is independent of their specific identity (for example, several proteins can have the same function because they have the same 'active sites', but materially can be quite different to one another, because there are lots of other, functionally irrelevant amino-acids in them). Secondly, because the information is within them and among them, some of it is embodied by the relationships between them, which obviously transcends them as individuals. It is also embodied by a 'complex' of components: a specific configuration of an assembly of different parts. For these reasons I chose the term transcendent complex to label it (and obviously I say more about this on the relevant webpage). To answer the question in the title of this section, a transcendent complex is the top in top-down causation.

Functional Equivalence Classes and modular hierarchy

Ellis explains that coarse graining produces higher-level variables from lower-level variables (for example, averages of particle behaviours). We would interpret this more specifically by saying that phenomena at a given level of organisation can produce transcendent phenomena, so creating a higher level of organisation; this is the mechanism generating the modular hierarchy of nature. If we start with a coarse-grained (termed 'effective' in some of the literature) view at some level, we are denied information about the details at the fine-grained level below. For this reason, many possible states at the lower level may be responsible for what we see at the higher (which is exactly the micro-state / macro-state relationship we use in statistical thermodynamics). There are, therefore, multiple realisations of any higher-level phenomenon. The multiple ways of realising a single higher-level phenomenon can be collected together as a class of functional equivalence: a set of states, configurations, or realisations at the lower level, which all produce an identical phenomenon at the higher level. A functional equivalence class is by definition the ensemble of entities sharing in common that they perform some defined function. But a phenomenon can only be functional in a particular context, since function is always context dependent (Cummins, 1975). This context is provided by the transcendent complex (TC), which organises one or more of the members of one or more functional equivalence classes into an integrated whole having ‘emergent properties’. The TC is an information structure composed of the interactions among its components. In practice, these make up the material body, which embodies the information that collectively constitutes the TC. It must be described in terms of functional equivalence classes because it is multiply realisable. 
Crucially, the TC does not integrate the lower-level components per se; it integrates their effects, so the TC emerges from functional equivalence classes, not from the particular structures or states that constitute their members. Specifically, a TC is the multiply realisable information structure that gives lower-level structures the context for their actions to become functional. It is an aggregate phenomenon of functions. For it to exist, a set of components must be interacting to perform these functions; the components must collectively be members of the necessary functional equivalence classes. This thought constitutes a subtle shift that generalises the notion of higher levels from mere coarse-grained aggregates of lower levels (e.g. averages) to the definition of functional equivalence classes, containing the lower levels as members of the class. Given this concept, we conclude that the existence of multiple lower-level phenomena belonging to a functional equivalence class, for example those constituting the form of a bacterial cell, indicates that a higher-level function is dictating the function of the lower-level phenomena. One might say that the higher level provides a ‘design brief’ for component parts to perform a particular function, and it does not matter what these parts are, or how they work, as long as they do the job.
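The micro-state / macro-state relationship can be made concrete in a few lines of Python. This is a minimal sketch under stated assumptions. The 'system' is a row of binary units, and the higher-level variable is simply how many of those units are switched on. That count is a hypothetical stand-in for a coarse-grained quantity such as an average. Grouping every lower-level configuration by the higher-level value it produces yields the classes of multiple realisation described above.

```python
from collections import defaultdict
from itertools import product


def coarse_grain(microstate):
    """Hypothetical macro-variable: how many units in the microstate are 'on'.

    Many distinct microstates give the same value, just as many particle
    configurations share one macroscopic description.
    """
    return sum(microstate)


def equivalence_classes(n_units):
    """Group every microstate of n binary units by the macrostate it realises."""
    classes = defaultdict(list)
    for micro in product((0, 1), repeat=n_units):
        classes[coarse_grain(micro)].append(micro)
    return dict(classes)


if __name__ == "__main__":
    for macro, members in sorted(equivalence_classes(4).items()):
        print(f"macrostate {macro}: {len(members)} realisations")
```

For four units, the classes have 1, 4, 6, 4 and 1 members. The middle macrostate has the most realisations. This toy captures only multiple realisability. It says nothing about function or context, and those are what distinguish a functional equivalence class from a mere coarse-graining.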

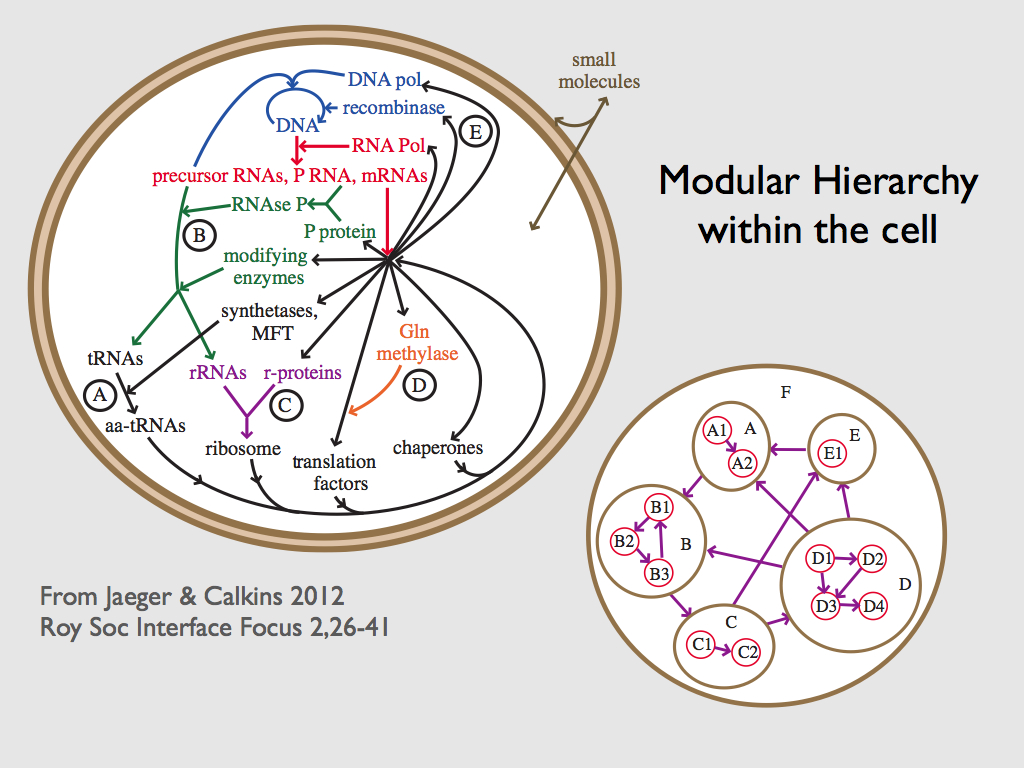

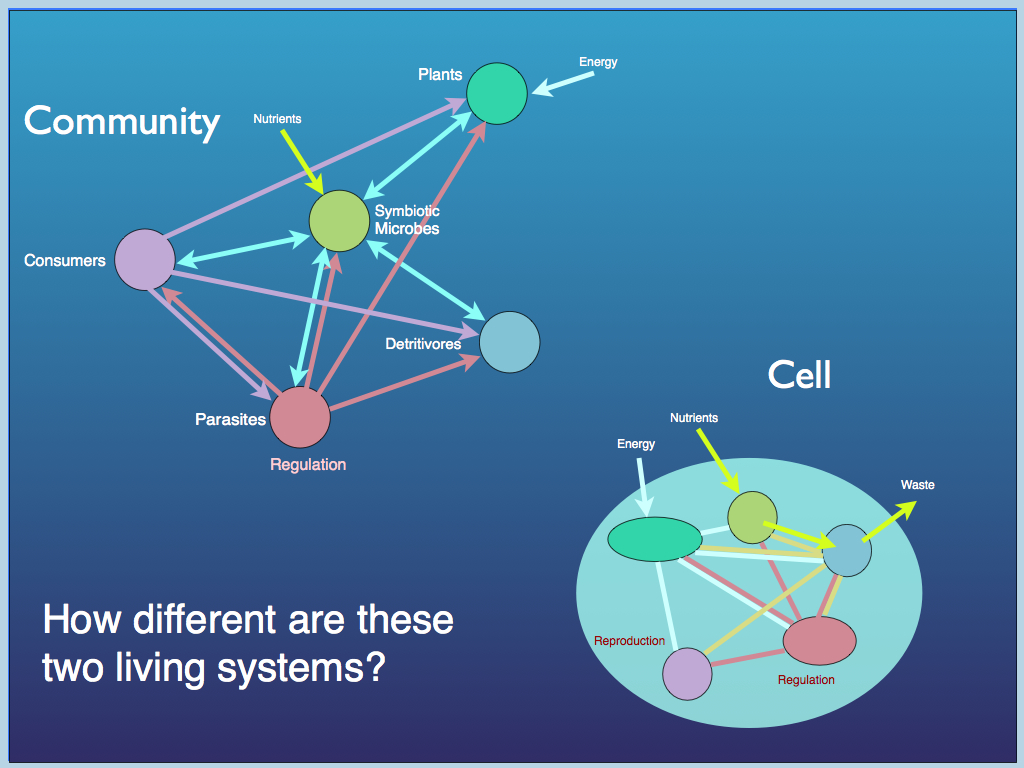

Luc Jaeger and colleagues have described functional equivalence in the molecular machinery of cells (Auletta, Ellis & Jaeger, 2008; Jaeger & Calkins, 2012). They point to functional equivalence among structurally different molecules, folding patterns of RNA-based functional molecules (e.g. RNase) and molecular networks, including regulation ‘complementation’, in which regulatory systems are interchangeable. Indeed, they catalogue a host of examples found in the genetic, metabolic and regulatory systems of bacterial cells. But functional equivalence is only one of three requirements for what they call top-down causation by information control. In this scheme, a higher level of organisation exercises its control not by setting boundary conditions, but by sending signals (information) to which lower-level components respond. The signals represent changes in the form or behaviour of lower-level elements that would benefit the functioning of the higher level. This is a kind of cybernetic control which spans scales of organisation (or ontological levels, as these authors refer to them: Auletta, Ellis & Jaeger, 2008; Jaeger & Calkins, 2012; Jaeger, 2015). Within this framework, it is the combination of top-down causation by information control with top-down causation by adaptive selection that enables the exploration of TCs and, according to Jaeger & Calkins (2012), might characterise life. In general, at any given level of biological organisation (other than top and bottom), there will be more than one TC sharing behaviours in common. These TCs may therefore belong to a functional equivalence class for the TC of the next level up in life’s hierarchy. A nested hierarchy of functional information structures is formed this way (see 'Even bacteria do it' below).

This diagram illustrates the concept of functional equivalence: different species (colours) of, e.g., bacteria carry different sets of functional traits. Any organism with the same set of traits is termed functionally equivalent with respect to the ecological community, because it no longer matters what the species is, only what the functions are.

This is now a core idea in the cybernetic approach to understanding life. We see considerable diversity at every level of organisation in living systems: most functioning proteins come in a range of different forms (all having a common active site), and genetically determined differences in, for example, the details of ribosomal subunit forms are the basis for species identification from 'environmental DNA' (the mix of DNA molecules found in, e.g., a water sample). In detail, cells are not identical, and of course neither are whole multicellular organisms. But (and it's a big 'but') they are functionally equivalent (at least in relevant ways), so they are in fact substitutable.

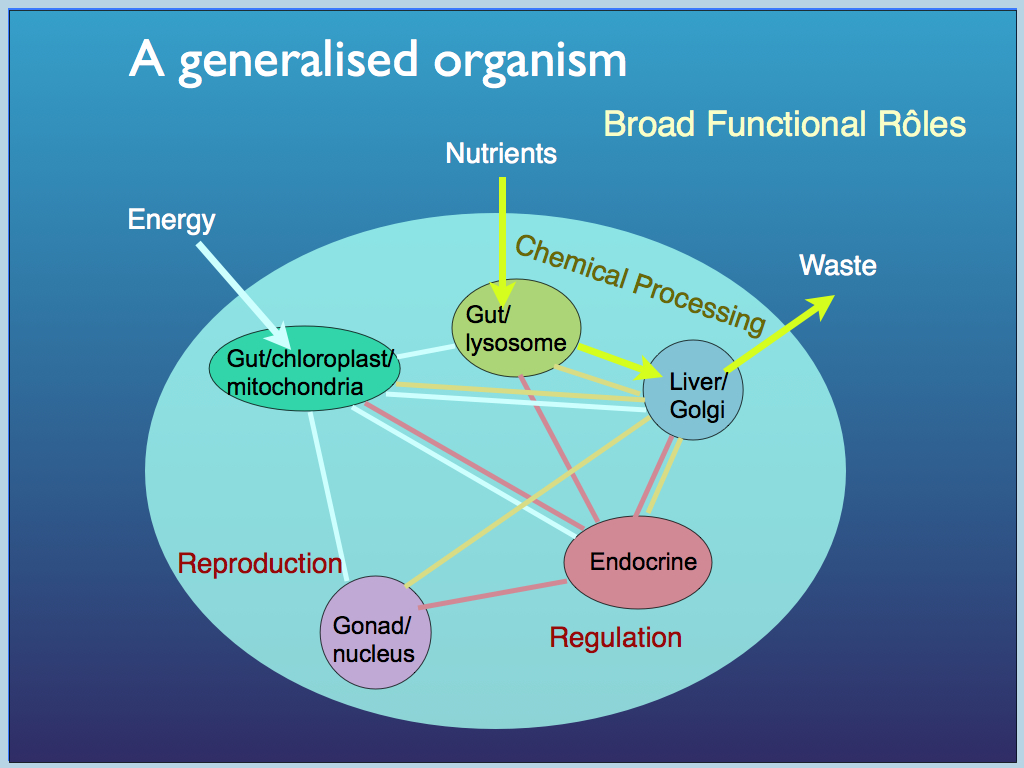

This gives us the idea of a generalised organism (above), in which a set of essential functional roles are present and interlinked in a particular (functional) way to form a coherent whole. There is something obviously missing from that diagram. Can you think what it is? Reward yourself if you thought "there's no boundary": of course there has to be an enclosing boundary or tegument as well. The labelling is a bit difficult when one tries to include all organisms (prokaryotes, auto- and heterotrophs, single-celled and multicellular, etc.), which is why some of the labels are multiple. Gut and liver are obviously not present in plants, but plants have their equivalents, at least at the cellular level. Flowering plants have gonads (the flowers and fruits); plants without flowers reproduce at the cellular level (vegetatively), where the cell nucleus performs the role... and so on. The important point here is that the wide diversity of apparatus found among organisms for performing these broad functions is, at this level of abstraction, not relevant: they are all members of functional equivalence classes. It is only when members of each required class are joined in the right way that a working organism can be created. Joining them in the right way (and keeping them together) is the role of the one part that is represented but not labelled in the diagram. That is the ordered set of interactions which collectively makes up the "operating system" of the organism: the TC that gives it wholeness and organism-level function.
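The claim that only roles and their interconnection matter can be expressed as a simple check. The particular parts that fill the roles are irrelevant to it. In this hypothetical Python sketch, the role names, the required links between roles and the example parts are all illustrative assumptions, loosely following the generalised-organism diagram. An organism counts as 'working' if every required role is filled by some part. The parts must also be wired together so that the required role-to-role links exist.

```python
# Hypothetical functional roles and role-to-role links, loosely following the
# generalised-organism diagram; the names are illustrative only.
REQUIRED_ROLES = {"energy_capture", "metabolism", "boundary", "reproduction"}
REQUIRED_LINKS = {
    ("energy_capture", "metabolism"),
    ("metabolism", "reproduction"),
    ("boundary", "metabolism"),
}


def is_working_organism(parts, interactions):
    """Return True if every required role is filled and the roles are correctly linked.

    parts: mapping from a concrete part (any name) to the role it performs.
    interactions: set of (part, part) pairs; every part named must appear in parts.
    Which part fills a role is irrelevant; only roles and their links are checked.
    """
    if not REQUIRED_ROLES <= set(parts.values()):
        return False
    # Re-express part-level interactions as role-level links before checking them.
    role_links = {(parts[a], parts[b]) for a, b in interactions}
    return REQUIRED_LINKS <= role_links


if __name__ == "__main__":
    plant = {"chloroplast": "energy_capture", "cytoplasm": "metabolism",
             "cell_wall": "boundary", "flower": "reproduction"}
    plant_links = {("chloroplast", "cytoplasm"), ("cytoplasm", "flower"),
                   ("cell_wall", "cytoplasm")}
    animal = {"gut": "energy_capture", "liver": "metabolism",
              "skin": "boundary", "gonad": "reproduction"}
    animal_links = {("gut", "liver"), ("liver", "gonad"), ("skin", "liver")}
    print(is_working_organism(plant, plant_links))    # True
    print(is_working_organism(animal, animal_links))  # True
    print(is_working_organism(animal, set()))         # False: right parts, no organisation
```

The plant-like and animal-like examples use entirely different parts, yet both pass the same check. The same animal parts with no interactions fail it. The check tests the organisation, not the inventory of components.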

A few biological examples

Bees do it.

The democracy used by a bee colony in choosing a new home for the hive (extensively studied by Thomas Seeley; see Seeley 2010) provides a good example. Several potential new homes, differing in quality, are explored by scout bees. Each scout presents a report to the hive, communicated by waggle dance. Through a network of such communications (pure information), support for the better options builds and is refined until a quorum is achieved, and by this a decision is made. The decision is not that of a single bee, but rather an emergent phenomenon and a property of the scout bees and their communications collectively. Thus the cause of the hive choosing a particular new home exists at a higher organisational level than the effective cause, which is the physical action of relocation exercised by each individual bee. The hive as a collective controls the home location of the individuals. So where precisely is the cause of the decision to be located? Causal power should be attributed to the behavioural repertoire of the individual bees, since without that such decision making would be impossible, but the effective cause is the actual movement of bees en masse. Where they go is determined by which potential new home achieved a quorum of support, which in turn is the outcome of information exchange building mutual information among scout bees. Crucially, this mutual information represents the level of match between the potential home and the hive’s requirements. The hive can be thought of as having a goal instantiated as a (multi-factorial) set-point: pure information. Potential homes are compared to this by the scouts, who, by a series of communications of pure information, reach a threshold of mutual information, from which, according to their behavioural algorithm, the specific effective cause (moving to the best-match home) results. 
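The positive-feedback character of the scouts' debate can be illustrated with a deliberately simple model. This Python sketch is not Seeley's model. It assumes that each round, each site's share of scout support is multiplied by the site's quality relative to the mean. This replicator-style update stands in for waggle-dance recruitment. The decision is made when one site's share reaches a quorum threshold, which here is just a parameter. The site names and quality scores are hypothetical.

```python
def choose_home(site_quality, quorum=0.8, max_rounds=1000):
    """Return (site, rounds) for the first site whose share of scout support reaches quorum.

    site_quality: mapping of candidate site -> quality score (must be > 0).
    Support starts equal across sites. Each round, a site's share is multiplied
    by its quality relative to the support-weighted mean quality: a
    replicator-style stand-in for recruitment by waggle dance, in which
    better sites are advertised more vigorously.
    Returns (None, max_rounds) if no site reaches the quorum.
    """
    share = {site: 1.0 / len(site_quality) for site in site_quality}
    for round_number in range(1, max_rounds + 1):
        mean_quality = sum(share[s] * site_quality[s] for s in share)
        share = {s: share[s] * site_quality[s] / mean_quality for s in share}
        leader = max(share, key=share.get)
        if share[leader] >= quorum:
            return leader, round_number
    return None, max_rounds


if __name__ == "__main__":
    sites = {"hollow_oak": 0.9, "nest_box": 0.75, "wall_cavity": 0.6}
    print(choose_home(sites))  # ('hollow_oak', 9)
```

No step in the model selects the best site directly. Its support simply compounds fastest, and a decision registers only when one collective share crosses the threshold. Sites of equal quality never reach a quorum.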
Again, crucially, the set-point is not a property of any one bee: it depends on the size of the colony, so its role in determining the outcome necessarily implies cybernetic control exercised by the higher level of organisation upon the lower. We do not yet know how the information describing the colony size is embodied in the bees, but we can be sure that information is the source of causation at every level of abstraction in this example.

Even bacteria do it.

Returning to bacteria, Jaeger and colleagues take as axiomatic that unicellular organisms have a ‘master function’ giving them the high-level goal of reproduction (including replication). If we regard the whole organism as nothing more than a coordinated network of chemicals together with their mutual interactions (reactions, binding, recognition etc.), this teleological (goal-oriented) function can only be achieved through top-down causation, since it does not exist at the level of chemical interactions. In general, any network of biochemical interactions can have several potential functions, because it can be an effective cause of several outcomes. According to the downward causation argument, the particular functions of the network that are observed in a living cell have been selected by the higher level of organisation from among all the possibilities, and the selection criterion is that they best match the higher-level function. For this to work there must be: a) a range of possible effective causes (the equivalence class); b) a higher-level function from which to identify c) a goal, which can be expressed as a set-point in cybernetic terms; and d) a means of influencing the behaviour of elements in the equivalence class e) that correlates with the difference between the present state and the goal. This ‘means of influencing’ is identified with ‘information control’. Jaeger and colleagues point to the substitutability of a biochemical process from one organism to another as an example. Certainly, this example illustrates an equivalence class, and it shows that of all the potential functions of the substituted element, one is expressed, and it is the one most beneficial to the whole organism, implying selection.
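Requirements a) to e) can be lined up against a minimal cybernetic loop. In this hypothetical Python sketch, a dictionary of effectors plays the equivalence class (a) and a numeric set-point stands for the goal (c). The only information passed down is the error between present state and goal (e). That error determines which member of the class is used (d). The higher-level function (b) that the set-point serves is not modelled, and the effector names and effect sizes are invented for illustration.

```python
def control_step(state, set_point, effectors):
    """One round of information control.

    state: current value of a cell-level variable (arbitrary units).
    set_point: the goal supplied by the higher level.
    effectors: non-empty equivalence class, mapping effector name -> change it makes.
    The error (set_point - state) is the only information passed down; the
    member of the class whose effect leaves the smallest remaining error is used.
    Returns (new_state, name_used), with name_used None if no member helps.
    """
    error = set_point - state
    best = min(effectors, key=lambda name: abs(error - effectors[name]))
    if abs(error - effectors[best]) >= abs(error):
        return state, None  # no member of the class would reduce the error
    return state + effectors[best], best


def regulate(state, set_point, effectors, max_steps=50):
    """Repeat control steps until no effector can reduce the error further."""
    used = []
    for _ in range(max_steps):
        state, name = control_step(state, set_point, effectors)
        if name is None:
            break
        used.append(name)
    return state, used


if __name__ == "__main__":
    effectors = {"pump_A": 2.0, "pump_B": 0.5, "channel_C": -1.0}
    print(regulate(0.0, 5.0, effectors))
    # Knock out one member: the goal is still reached using the others.
    del effectors["pump_A"]
    print(regulate(0.0, 5.0, effectors))
```

Knocking out pump_A does not prevent the goal from being reached. Another member of the class is simply recruited more often. This is, in miniature, the substitutability that Jaeger and colleagues point to.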

How ecosystems develop top-down control

Perhaps an even clearer example of top-down causation can be found in ecology, where the niche of every species (more precisely, every phenotype) is determined by its ecological community, which is of course the sum of all other species exerting an influence. An ecological community consists of all the organisms in it, together with all the interactions among them. Whilst many of these interactions are of a material form (transferring food resources from prey to predator), many are not; for example, competition merely describes the influence of one organism’s behaviour on the outcomes for another’s. There seems to be some sort of causation by pure information on two levels here: first, the communication needed for one organism to influence another without a material transfer between them and, second, at a grander scale, the defining of a particular niche by the community, of which any species occupying it is a part.

Taking the first level briefly, it is obvious that, for example, a cat can prohibit another from sitting on the mat by staring at it in a display of dominance. This is of course communication using a sign, and the effect is to alter the ‘mind’ of the cat on the receiving end. Influence by communicated information like this is in a special category because it relies on sophisticated cognition to work (at least mechanism 3 in Ellis’s list). Bacteria communicate by releasing chemical signals into their environment, but this could be thought of as an extension of the chemical signaling network within the cell to include the extra-cellular biotic environment. This kind of signaling may well have been an essential step in the development of multicellular organisms. The characteristic way in which such signals are implemented is through the well-known lock-and-key recognition of signaling molecules by their receptor molecules. We can certainly interpret this as a communication channel carrying information which in turn influences biochemical processes in the cell. At every point in the communication, we understand that the information is embodied in the form of molecules, which in turn are a material part of the living system.

The second and higher level of causation is more relevant here because it constitutes diktat from information instantiated at a higher level of organisation down to that at a lower level. The way it works is that species both create new niches and close them off by occupying them, so in effect an existing assembly of species sets boundary conditions for the ecological sustainability of any population of a new species. The constraint of low-level processes by boundary conditions set at a higher level is common among natural systems and inevitable among synthetic ones, a prime example being Conway’s Game of Life. Whatever happens in the game is established by the initial conditions, which effectively set the boundary conditions for what follows. The living ecosystem is a far more interesting case, since here the boundary conditions are not ‘given’ at the beginning; rather, they emerge as the community builds, and they derive from its member populations, to which they also apply. It is like a club which changes its rules depending on who has already joined and then applies these rules to new applicants. Is this really a case of top-down causation, or is it only a matter of perspective? We find it convenient to aggregate the populations into the level of a community, but perhaps there is no such thing in reality. In the present case, this is rather easy to answer, because what we call the community is not simply an assembly of organisms: it specifically includes all their interactions and the organisation among them. An ecological community is specified by a very particular network of interactions, unique to it. This network exists at the community scale of organisation (indeed, it defines that scale), and it gives rise to measurable properties that are only observable at that scale. 
As we know, a network of this sort is functional information instantiated in the probability distribution of interactions among its nodes; ultimately, among individual organisms in the case of an ecological community. Accordingly, we conclude that an ecological community is a clear case of information which emerged from complexity (the formation of a relatively stable network from component interactions) and therefore exists at a higher level of organisation than the component parts. Further, we conclude that this information is functional in, among other things, setting the ‘rules for entry’ for any prospective population of organisms, and is thereby an example of top-down causation. This has led to what is, for many ecologists, a radical idea: that the ecosystem is more than the sum of its parts, and in fact that the organising structure has a significant role to play in maintaining resilience and robustness. The idea that there is something functional and yet intangible within an ecological community is discussed on the page The Ma of Ecology. There, we consider the emergence of a TC from the interactions among organisms and review some ecological evidence for it.
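The 'club that changes its rules' can be sketched as a toy assembly model. Everything here is an assumption for illustration, not a model from the ecological literature:

- Each hypothetical species needs some resources and may provide others (a crude nod to niche creation).
- A resource already consumed by a member counts as occupied, so that niche is closed off.
- A newcomer is admitted only if all its needs are available and at least one of them is still unoccupied.

The entry rule is computed entirely from the current membership.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Species:
    """A hypothetical species: resources it must consume and resources it creates."""
    name: str
    needs: frozenset
    provides: frozenset = frozenset()


def can_join(community, newcomer, base_resources):
    """Toy entry rule derived entirely from the current membership."""
    available = set(base_resources)
    occupied = set()
    for member in community:
        available |= member.provides  # niches created by existing members
        occupied |= member.needs      # niches closed off by existing members
    needs_met = newcomer.needs <= available
    has_open_niche = bool(newcomer.needs - occupied)
    return needs_met and has_open_niche


def assemble(arrivals, base_resources):
    """Admit arriving species one at a time; return the names of those that joined."""
    community = []
    for species in arrivals:
        if can_join(community, species, base_resources):
            community.append(species)
    return [species.name for species in community]


if __name__ == "__main__":
    base = {"light", "water"}
    grass = Species("grass", frozenset({"light", "water"}), frozenset({"leaves"}))
    rabbit = Species("rabbit", frozenset({"leaves"}), frozenset({"dung"}))
    beetle = Species("dung_beetle", frozenset({"dung"}))
    rival = Species("rival_grass", frozenset({"light", "water"}))
    print(assemble([grass, rabbit, beetle, rival], base))  # ['grass', 'rabbit', 'dung_beetle']
    print(assemble([beetle, rabbit, grass, rival], base))  # ['grass']
```

The same pool of arrivals produces different communities depending on arrival order. The grazer and the dung beetle can join only after the grass has created their niches. The second grass is always excluded once the first is established.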

Soon, we will publish a page devoted to ecological community composition with reference, for example, to niche construction theory (see Odling-Smee, F.J., Laland, K.N. and Feldman, M.W. 1996. Niche construction. Am. Nat. 147, 641–648). I hope to work in conjunction with John Kineman on it.

Relation with hierarchical entailments

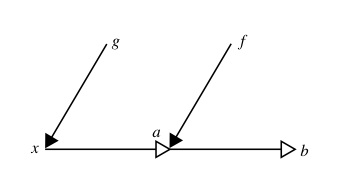

Robert Rosen used category theory-based relational diagrams to show a fundamental difference between sequential and hierarchical compositions of causal entailment.

This relational diagram shows sequential composition. The formal causes g and f operate on (solid arrow heads) what Rosen and followers call material causes (hollow arrow heads). This is effectively a process chain and there is nothing very exciting about it (see the Causation page for further explanation of the diagram notation).

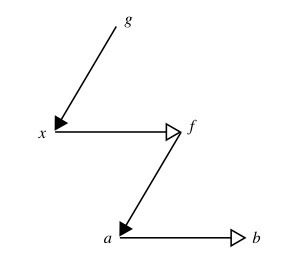

This relational diagram shows the much more interesting case of hierarchical composition. The important difference is that formal cause g acts on material x to create formal cause f, which in turn acts on material a to produce b. This is categorically different from the sequential composition above because it describes the dependence of an efficient cause (f -> a -> b) on another (hierarchically superior) efficient cause (g -> x -> f). The sequential composition is additive and therefore possible to decompose into its components without loss of information, but the hierarchical composition is irreducible, since if it were collapsed into (g -> stuff -> b), we would lose critical information about the causal architecture. Both diagrams shown here come from Louie & Poli (2011), where they are labelled (14) and (16) respectively.

In the sequential case, we have essentially a concatenation of mappings, corresponding to a chain of efficient causes (that is why it is additive and therefore decomposable). In the hierarchical case, formal cause, acting to produce efficient cause on one material substrate, results in a second formal cause, which in turn can act on another material substrate. In other words, whilst sequential composition manifests as a chain of transformations of material (in position or composition), hierarchical composition shows the creation of a new formal cause (informational constraint) that can operate on material. This is called hierarchical because the first formal cause is necessary for the latter, which in turn depends upon it, being entailed jointly by it and the material it acts on.
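One way to see the difference in programming terms is through function types. This Python sketch is an analogy only, not Rosen's category-theoretic formalism. 'Material' is just a number, and every mapping is hypothetical. In the sequential case, each mapping takes material and returns material. In the hierarchical case, g takes material x and returns a new mapping f, which can then act on material a to produce b.

```python
from typing import Callable

Material = float                          # toy stand-in for a material substrate
Mapping = Callable[[Material], Material]  # a mapping that acts on material


# Sequential composition: each hypothetical mapping turns material into material.
def f_seq(a: Material) -> Material:
    return a + 1.0


def g_seq(b: Material) -> Material:
    return 2.0 * b


def sequential(a: Material) -> Material:
    # a -> f -> intermediate material -> g -> result: a chain of transformations.
    return g_seq(f_seq(a))


# Hierarchical composition: g acts on material x and its product is a new mapping f.
def g_hier(x: Material) -> Mapping:
    def f(a: Material) -> Material:
        # What f does depends on the material x that g acted on.
        return x * a
    return f


if __name__ == "__main__":
    print(sequential(3.0))  # 8.0
    f = g_hier(3.0)         # g acting on x entails f
    print(f(2.0))           # f acting on a entails b: 6.0
```

A single two-argument function h(x, a) could return the same b. It would no longer expose f as something produced by g and available to act. That lost structure is, loosely, the information about causal architecture that collapsing the hierarchy discards.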

It is not a great leap of the imagination to see that the first formal cause in this system may be embodied at a system level and the second at some hierarchically lower level of constituents of the system. But if the higher-level system depends on the lower for its existence, we are immediately plunged into an apparent paradox. We have a formal cause that is necessary for the creation of a hierarchically superior formal cause: a clear case of circular causation. In fact, this situation is precisely the one that Rashevsky and Rosen set out to solve in order to understand 'life itself'. TCs, as I have defined them, do not necessarily involve such circular causation; it only crops up if one of the functions of the TC (a system-level function) is to create a subsystem within it that has at least one function necessary for the existence of the system-level TC. So, less specifically, the TC provides formal cause for one or more of its subsystems, but not necessarily in the form of a cycle: that is, it does not necessarily depend on the lower-level function that it participates in by formal causation. As such, it seems to me that a TC, as I have defined it, is a case of hierarchical composition.
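We can frame this as a question about a directed graph in which an edge A -> B means 'A is necessary for B'. The question is whether a given arrangement involves circular causation, or is hierarchical composition without a cycle. The Python sketch below is a standard depth-first search for a cycle. The node names in the example are hypothetical labels for a TC, a subsystem it creates and that subsystem's function. They are not a model of any particular organism.

```python
def find_cycle(entails):
    """Return one cycle in a directed graph as a list of nodes, or None if there is none.

    entails: dict mapping each node to the nodes it is necessary for.
    Depth-first search: a node is 'on the path' while its descendants are explored;
    reaching such a node again means the path has looped back on itself.
    """
    on_path, finished, path = set(), set(), []

    def visit(node):
        on_path.add(node)
        path.append(node)
        for nxt in entails.get(node, ()):
            if nxt in on_path:
                return path[path.index(nxt):] + [nxt]
            if nxt not in finished:
                cycle = visit(nxt)
                if cycle is not None:
                    return cycle
        on_path.discard(node)
        path.pop()
        finished.add(node)
        return None

    for start in entails:
        if start not in finished:
            cycle = visit(start)
            if cycle is not None:
                return cycle
    return None


if __name__ == "__main__":
    hierarchical = {"TC": ["subsystem"], "subsystem": ["subsystem_function"]}
    circular = {"TC": ["subsystem"], "subsystem": ["subsystem_function"],
                "subsystem_function": ["TC"]}
    print(find_cycle(hierarchical))  # None
    print(find_cycle(circular))      # ['TC', 'subsystem', 'subsystem_function', 'TC']
```

In the hierarchical example, the TC is necessary for the subsystem, but nothing the subsystem does is necessary for the TC, so no cycle is found. Adding the single edge from the subsystem's function back to the TC produces exactly the circular case that Rashevsky and Rosen set out to address.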

Further Reading on Downward Causation

For a good introduction to causation in general, we recommend Woodward, J. (2003a). Making Things Happen: A Theory of Causal Explanation. Oxford University Press, Oxford, UK.

For Relational Biology, there is no substitute for Rosen's (1991) 'Life Itself', which is good to read along with his 'Essays on Life Itself' (2000). For more advanced understanding, we recommend works by A.H. Louie: 'More Than Life Itself' (2009), 'The Reflection of Life' (2013) and 'Intangible Life' (2017).

For Downward Causation, of course we suggest our chapter 'Living through Downward Causation', pp. 303–333 in 'From Matter to Life: Information and Causality' (2017), Cambridge University Press. Also recommended are the original, Campbell (1974), and Denis Noble's (2012) work.

References

Auletta, G.; Ellis, G.; Jaeger, L. (2008). Top-down causation by information control: From a philosophical problem to a scientific research programme. J. R. Soc. Interface 5, 1159–1172.

Campbell, D.T. (1974). ‘Downward causation’ in Hierarchically Organized Biological Systems. pp. 179–186. In: Studies in the Philosophy of Biology, edited by F.J. Ayala & T. Dobzhansky. Macmillan Press.

Cummins, R. (1975). Functional analysis. Journal of Philosophy, 72:741–765.

Ellis, G.F.R. (2011) Top-down causation and emergence: Some comments on mechanisms. Interface Focus. 2, 126–140.

Jaeger, L. (2015). A (bio)chemical perspective on the origin of life and death. In “The role of Life in Death”, Edited by John Behr, Conor Cunningham and The John Templeton Foundation. Eugene, Oregon: Cascade Books, Wipf and Stock Publishers.

Jaeger, L.; Calkins, E. (2012). Downward causation by information control in micro-organisms. Interface Focus 2012, 2, doi:10.1098/rsfs.2011.0045.

Louie, A.H. (2013). More than life itself: A synthetic continuation in relational biology. Walter de Gruyter.

Louie, A.H. and Poli, R. (2011). The spread of hierarchical cycles. Int. J. General Systems. 40. 237-261.

Noble, D. (2012). A theory of biological relativity: no privileged level of causation. Interface Focus, 2: 55–64.

Seeley, T.D. (2010). Honeybee Democracy. Princeton: Princeton University Press.

Walker, S.I., Cisneros, L. and Davies, P.C.W. (2012). Evolutionary transitions and top-down causation. In Proceedings of Artificial Life XIII, Michigan State University, East Lansing, MI, USA. 13, 283–290.

Wood, J.M. (2011). Bacterial Osmoregulation: A Paradigm for the Study of Cellular Homeostasis. Annu. Rev. Microbiol. 65:215–38.